In a “containerized” world running “cloud native” applications, there is a need for a container orchestrator such as Kubernetes, the leading open source platform providing such features. Furthermore, Red Hat has pushed this project to an enterprise-grade level by developing OpenShift (on top of Kubernetes), adding more powerful features in order to simplify the application development pipeline. Today, thanks to these platforms, you can easily move your containerized applications (or microservices-based solutions) from an on-premises installation to the cloud without changing anything on the development side; it’s just a matter of having a Kubernetes or OpenShift cluster up and running and moving your containers from one side to the other.

As announced at the latest Red Hat Summit in San Francisco, Red Hat and Microsoft are working side by side to provide a managed OpenShift service (as is already available for Kubernetes with AKS) in order to improve the developer experience; you can read more about this effort here.

But while waiting for this awesome and really easy solution, what’s the way to deploy an OpenShift cluster on Azure today?

Microsoft already provides some good documentation to help you do that for both the open source OKD project (Origin Community Distribution of Kubernetes, formerly known as OpenShift Origin) and the Red Hat productized version named OCP (OpenShift Container Platform), if you have a Red Hat subscription. Furthermore, there are two different GitHub repositories which provide templates for deploying both: openshift-origin and openshift-container-platform.

In this blog post, I’d like to describe my personal experience of deploying OKD on Azure, summarizing and explaining all the steps needed to have OpenShift up and running in the cloud.

Get the Azure template

First of all, you have to clone the openshift-origin repository, available under the Microsoft GitHub organization, which provides the deployment template (in JSON format) for deploying OKD.

git clone https://github.com/Microsoft/openshift-origin.git

The master branch contains the most current release of OKD, including experimental items, so it offers new features but may be unstable. For this reason, it’s better to switch to one of the release branches to use a stable release, for example 3.9:

git checkout -b release-3.9 origin/release-3.9

This repository contains the ARM (Azure Resource Manager) template file for deploying the needed Azure resources, the related configurable parameters file and the Ansible scripts for preparing the nodes and deploying the OpenShift cluster.

You will come back to the artifacts provided in this repository later, because there are some other steps you need to complete as prerequisites for the final deployment.

Generate SSH key

In order to secure access to the OpenShift cluster (to the master node), an SSH key pair without a passphrase is needed. Once you have finished deploying the cluster, you can always generate new keys that use a passphrase and replace the original ones used during the initial deployment. The following command shows how to do this using the ssh-keygen tool on Linux and macOS (for doing the same on Windows, follow here).

ssh-keygen -f ~/.ssh/openshift_rsa -t rsa -N ''

The above command generates an SSH key pair using the RSA algorithm (-t rsa) and without a passphrase (-N ''), storing the private key in the ~/.ssh/openshift_rsa file and the public key in ~/.ssh/openshift_rsa.pub.
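Before moving on, it can be worth confirming that both halves of the key pair were actually written. The following is a minimal sketch and not part of the original procedure; the check_key_pair function name is hypothetical, and it only assumes the file naming used by the ssh-keygen command above.

```shell
# Hypothetical helper: succeed only if the private key and its .pub
# counterpart both exist and are non-empty.
check_key_pair() {
  key_file=$1
  [ -s "$key_file" ] && [ -s "$key_file.pub" ]
}

# Example: report on the key generated above (path taken from the article).
if check_key_pair "$HOME/.ssh/openshift_rsa"; then
  echo "key pair found"
else
  echo "key pair missing: run ssh-keygen first"
fi
```

The .pub file matters later on, because its content is what goes into the sshPublicKey template parameter.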

In order to make the SSH private key available in the Azure cloud, we are going to use the Azure Key Vault service as described in the next step.

Store SSH Private Key in Azure Key Vault

Azure Key Vault is used for storing cryptographic keys and other secrets used by cloud applications and services. It’s useful to create a dedicated resource group for hosting the Key Vault instance (this group is different from the one for deploying the OpenShift cluster).

az group create --name keyvaultgroup --location northeurope

The next step is to create the Key Vault in the above group.

az keyvault create --resource-group keyvaultgroup --name keyvaultopenshiftazure --location northeurope --enabled-for-template-deployment true

The Key Vault name must be globally unique, not just unique within the newly created resource group.
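Since the name has to be chosen before the vault exists, a quick local check of its format can save a failed call. The sketch below assumes the Key Vault naming rules as I understand them from the Azure documentation (3 to 24 characters, only letters, digits and hyphens, starting with a letter, not ending with a hyphen, no consecutive hyphens). The is_valid_vault_name function is a hypothetical helper, and it cannot tell whether the name is already taken globally.

```shell
# Hypothetical helper: check a candidate Key Vault name against the
# naming rules assumed above. It does not check global uniqueness.
is_valid_vault_name() {
  name=$1
  len=${#name}
  # Length must be between 3 and 24 characters.
  if [ "$len" -lt 3 ] || [ "$len" -gt 24 ]; then
    return 1
  fi
  case $name in
    *[!A-Za-z0-9-]*) return 1 ;;  # character other than letter, digit or hyphen
    *--*)            return 1 ;;  # consecutive hyphens
    [!A-Za-z]*)      return 1 ;;  # does not start with a letter
    *-)              return 1 ;;  # ends with a hyphen
  esac
  return 0
}

# Example with the vault name used in this post.
if is_valid_vault_name keyvaultopenshiftazure; then
  echo "name format looks valid"
fi
```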

Finally, you have to store the SSH private key in the Key Vault to make it accessible during the deployment process.

az keyvault secret set --vault-name keyvaultopenshiftazure --name openshiftazuresecret --file ~/.ssh/openshift_rsa

Create an Azure Active Directory service principal

Because OpenShift communicates with Azure, you need to give it permissions to execute operations; this is done by creating a service principal in Azure Active Directory.

The first step is to create the resource group where we are going to deploy the OpenShift cluster. The service principal will be assigned the Contributor role on this resource group.

az group create --name openshiftazuregroup --location northeurope

Finally, create the service principal.

az ad sp create-for-rbac --name openshiftazuresp --role Contributor --password mypassword --scopes /subscriptions//resourceGroups/openshiftazuregroup
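The --scopes value identifies the resource group in the form /subscriptions/<subscription-id>/resourceGroups/<group-name>. As a small sketch, the scope can be assembled in the shell; rg_scope is a hypothetical helper, and the all-zero subscription ID is only a placeholder for your own one.

```shell
# Hypothetical helper: build the resource group scope string expected by --scopes.
rg_scope() {
  subscription_id=$1
  resource_group=$2
  printf '/subscriptions/%s/resourceGroups/%s\n' "$subscription_id" "$resource_group"
}

# Placeholder subscription ID; the real one can be read with:
#   az account show --query id --output tsv
rg_scope "00000000-0000-0000-0000-000000000000" openshiftazuregroup
```

The printed string can then be passed as the --scopes argument of the service principal command.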

From the JSON output, it’s important to take note of the appId field, which will be used as the aadClientId parameter in the deployment template.

Customize and deploy the Azure template

Back in the openshift-origin repository that we cloned before, the azuredeploy.parameters.json file provides all the parameters for the deployment. All the corresponding possible values are defined in the related azuredeploy.json file.

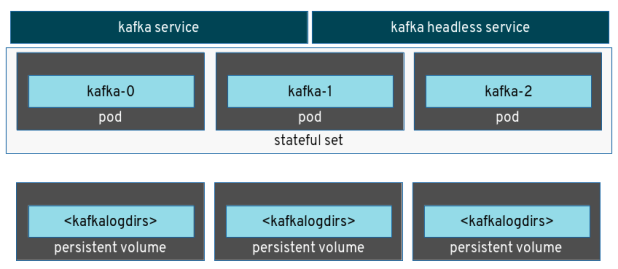

The azuredeploy.json file defines the deployment template and describes all the Azure resources that will be deployed to make up the OpenShift cluster, such as virtual machines for the nodes, virtual networks, storage accounts, network interfaces, load balancers, public IP addresses (for the master and infra nodes) and so on. It also describes the parameters with the related possible values.

Taking a look at the parameters JSON file, there are some parameters with a “changeme” value. Of course, it means that we have to assign meaningful values to these parameters because they make up the main customizable part of the deployment itself:

- openshiftClusterPrefix: a prefix used for assigning hostnames to all nodes (master, infra and worker nodes)

- adminUsername: admin username for both operating system login and OpenShift login

- openshiftPassword: password for the OpenShift login

- sshPublicKey: the SSH public key generated in the previous steps (the content of the ~/.ssh/openshift_rsa.pub file)

- keyVaultResourceGroup: the name of the resource group that contains the Key Vault

- keyVaultName: the name of the Key Vault you created

- keyVaultSecret: the Secret Name you used when creating the Secret (that contains the Private Key)

- aadClientId: the Azure Active Directory Client ID you should have noted when the service principal was created

- aadClientSecret: the Azure Active Directory service principal secret/password chosen on creation

Other useful parameters are:

- masterVmSize, infraVmSize and nodeVmSize: size for VMs related to the master, infra and worker nodes

- masterInstanceCount, infraInstanceCount and nodeInstanceCount: number of master, infra and worker nodes

All the deployed nodes run CentOS as the operating system.

Instead of replacing the values in the original parameters file, it’s better to make a copy and rename it, for example, as azuredeploy.parameters.local.json.
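As a rough sketch of that step, the copy can be created from the shell and then checked for placeholders that were forgotten. The count_changeme function is a hypothetical helper; it assumes each placeholder sits on its own line as the quoted string "changeme", so it counts lines rather than individual occurrences.

```shell
# Hypothetical helper: count the lines in a parameters file that still
# contain the "changeme" placeholder.
count_changeme() {
  # grep -c prints 0 but exits non-zero when nothing matches, hence "|| true".
  grep -c '"changeme"' "$1" || true
}

# Run from inside the cloned repository; skipped if the file is not there.
if [ -f azuredeploy.parameters.json ]; then
  # Create the local copy once, without overwriting earlier edits.
  [ -f azuredeploy.parameters.local.json ] ||
    cp azuredeploy.parameters.json azuredeploy.parameters.local.json
  echo "placeholders left: $(count_changeme azuredeploy.parameters.local.json)"
fi
```

A count of 0 suggests that every required parameter has been given a real value before the deployment is started.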

After configuring the template parameters, you can deploy the cluster by running the following command from inside the cloned repository directory (so that it uses the contained JSON template file and the related parameters file).

az group deployment create -g openshiftazuregroup --name myopenshiftcluster --template-file azuredeploy.json --parameters @azuredeploy.parameters.local.json

This command can take quite a long time depending on the number of nodes and their size. At the end, it shows the output in JSON format, from which the main fields (in the “outputs” section) are:

- openshift Console Url: it’s the URL of the OpenShift web console you can access using the configured adminUsername and openshiftPassword

- openshift Master SSH: contains the information for accessing the master node via SSH

- openshift Infra Load Balancer FQDN: contains the fully qualified domain name for accessing the infra node

In order to access the OpenShift web console, you can just copy and paste the “openshift Console Url” value in your preferred browser.

In the same way, you can access the OpenShift master node by just copying and pasting the “openshift Master SSH” value in a terminal.

Once on the master node, you can start to use the oc tool for interacting with the OpenShift cluster, for example showing the available nodes with the oc get nodes command.

You may notice that these URLs don’t have very friendly names; that’s because the related variables in the template that define them are:

"infraLbPublicIpDnsLabel": "[concat('infradns', uniqueString(concat(resourceGroup().id, 'infra')))]",

"openshiftMasterPublicIpDnsLabel": "[concat('masterdns', uniqueString(concat(resourceGroup().id, 'master')))]"

Conclusion

Nowadays, as you can see, by following a handful of simple steps and leveraging the Azure template provided by Microsoft and Red Hat, it’s not so difficult to have an OpenShift cluster up and running in the Azure cloud. If you think about all the resources that need to be deployed (nodes, virtual network, load balancers, network interfaces, storage, …), the template really simplifies the overall deployment. Of course, developers’ lives will be easier when Microsoft and Red Hat announce the managed OpenShift offering.

Having an OpenShift cluster running in the cloud is just the beginning of the fun … so, now that you have it … enjoy developing your containerized applications!